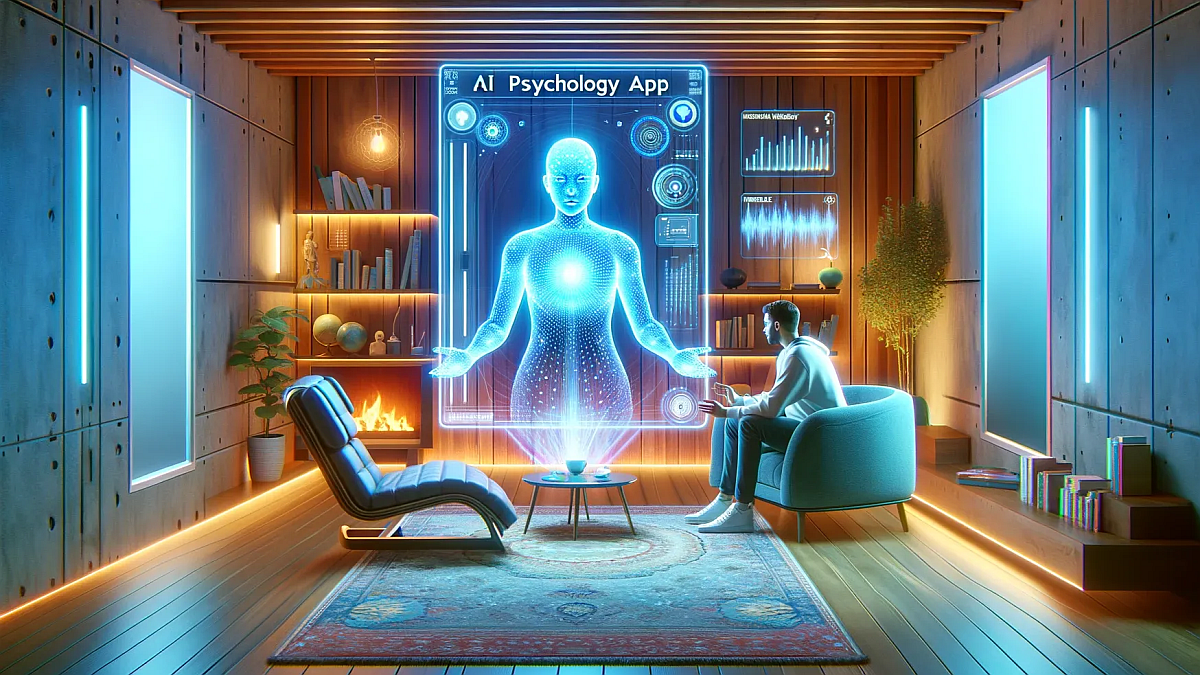

We are in the middle of 2025, and enough time has passed to understand how society's adoption of AI is shifting. Generative artificial intelligence has ceased to be a strictly instrumental tool and has become an emotional interlocutor.

The latest update on generative AI use from the Harvard Business Review reveals that AI as therapy and companionship leads the ranking of the 100 most relevant use cases, strongly displacing merely technical applications. This paradigm shift forces us to ask to what extent we are willing to delegate our inner life to algorithms, and what the consequences will be if we establish deep relationships with digital entities.

The phenomenon of “synthetic relationships” relies on the promise of instant empathy, 24/7 availability and an affordable cost. Users all over the world are turning to chatbots and custom assistants not only to organize their schedules, but also to process complex emotions such as grief or anxiety. A paradigmatic example comes from South Africa, where the shortage of mental health professionals (one psychologist for every 100,000 people) has led many to seek help from language models which, although they do not guarantee absolute privacy, offer a judgment-free space to vent. The urgency of the need for care leads people to overlook the line between occasional support and emotional dependence.

Several innovations have fed this trend: the appearance of custom GPTs and improvements in reasoning models that enrich their answers with intermediate explanations. Added to these are voice commands that extend the interaction to moments such as driving, and costs that are far more accessible than just twelve months ago. Thanks to these improvements, AI is entering territory traditionally reserved for psychology, and users, amazed by its capabilities, may prefer it to a human interlocutor.

The shift from the technical to the emotional sphere is illustrated by the top three use cases: first, therapy and companionship; second, organizing daily life; third, the search for purpose. These three essential aspirations of the human condition (healing inner wounds, ordering everyday chaos and finding meaning) reflect a desire for self-realization that transcends productive efficiency. When AI stops responding only to logical instructions and begins to soothe our fears and nurture our ambitions, we enter an area where risk and opportunity coexist.

In the short term, many users experience real benefits: they gain clarity about their goals, improve habits, reinforce learning processes and even get help designing travel itineraries or appealing fines. But these positive effects hide a dark side. Excessive dependence can undermine personal initiative by replacing intrinsic motivation with the comfort of immediate answers. AI makes us more efficient, but it robs us of the ability to face the creative void and the challenge of uncertainty.

The normalization of artificial sources of emotional support can also reduce our resilience. Faced with a real crisis (an argument, a professional failure, an unexpected loss), an AI's response will always be designed to comfort us, without causing the necessary discomfort that fosters personal growth. By softening every conflict, we run the risk of forging fragile individuals who lack the strength to navigate the inevitable ups and downs of human life.

Meanwhile, the issue of privacy keeps gaining relevance. Many users express distrust of the massive use of their data by large platforms. On the one hand, they criticize the excessive collection of personal information; on the other, they lament that the systems do not retain enough memory to offer more coherent support. This tension highlights the contradiction between the desire for intimacy with AI and the fear of Big Tech surveillance.

Looking toward the future, it is worth asking how these dynamics will affect our social and emotional behavior. If we accept that a good part of our affective needs can be met by algorithms, we could witness a shift from face-to-face human interaction toward synthetic bubbles. This would have a deep impact on the structure of communities and on collective mental health, changing norms of coexistence and reducing activity in face-to-face spaces for discussion. If we combine the decline in global birth rates, the emotional detachment of social networks and the now-complacent virtual partners, we have the perfect recipe for a new dystopian chapter of your favorite science fiction series.

To mitigate these risks, it is essential to establish design safeguards. One option is to educate users about the limitations of the models and to introduce time limits on AI sessions, so that the user receives alerts when exceeding a configured threshold. We must also incorporate clear reminders about the artificial nature of the interlocutor, reinforcing the awareness that it is a simulation and not an authentic human bond. In addition, it is vital to develop educational programs that include training in digital discernment and emotional management in a digitally mediated environment.

It is also advisable to combine the use of automated agents with spaces for human support. Hybrid models would allow users to alternate AI sessions with face-to-face or virtual meetings with mental health professionals and support groups. In this way, the accessibility and availability of the technology are preserved without giving up the richness of human dialogue, while ensuring expert supervision that can detect signs of excessive dependence.

Finally, the creation of regulatory frameworks and ethical standards will be essential. Governments and international organizations must collaborate to define transparency policies on data use, security protocols and informed consent requirements. The technology industry, for its part, must commit to independent audits and certifications that guarantee responsible practices in the development of emotional support systems.

The real challenge is to find the balance between the transformative potential of AI and the irreplaceable need for human contact. Only in this way can we take advantage of the comfort and reach of a virtual tutor without giving up our essence: the encounter with the other, with their flaws and virtues, with the unpredictable and the imperfect, which is ultimately what makes us complete human beings.

CEO of Varegos and university and secondary school teacher specializing in AI.

Source: Ambito

David William is a talented author who has made a name for himself in the world of writing. He is a professional author who writes on a wide range of topics, from general interest to opinion news. David is currently working as a writer at 24 hours worlds where he brings his unique perspective and in-depth research to his articles, making them both informative and engaging.