Large language models (LLMs) such as ChatGPT are transforming the way we communicate and obtain information. However, these technological advances face significant challenges in preventing biases, particularly those affecting the LGBTQI+ community and other diverse groups.

In this regard, Stanford University's AI Index Report 2024 reveals that the data used to train LLMs is predominantly biased in terms of gender and race. Likewise, the latest UNESCO report, published in March of this year, warned of this problem in connection with regressive gender stereotypes. Such data can perpetuate these stereotypes and, in turn, exclude a wide variety of voices. In addition, a report by McKinsey & Company points out that organizations with diverse, inclusive, and equitable teams are more efficient, productive, innovative, and creative.

In this context, a question facing the community is how to extend the commitment to social justice and human rights made in the real world to the virtual realm in general and, specifically, to the development of advanced technologies such as LLMs, so that they produce results that take all forms of diversity into account and fully represent society.

To achieve this goal, several key aspects must be considered. From an economic perspective, the current labor market in computing in general, and in these systems in particular, does not have enough professionals to meet all technological needs. Promoting diversity helps bridge this gap by attracting more people from different backgrounds, and it fosters more ingenious and effective solutions to society's most critical challenges. Furthermore, in terms of justice and equity, biases in data sets can lead to problematic outcomes if they are not properly addressed.

It is therefore crucial that AI models be constantly audited and reviewed to identify and mitigate biases, which generally involve associating certain professional roles or emotions with specific genders. This predisposition can also extend to other aspects of identity, such as sexual orientation and gender identity.

When AI “hallucinations” are not fully inclusive

“Hallucinations” in LLMs represent a serious problem, since these systems generate responses that, although syntactically and semantically correct, are disconnected from reality.

One of the most notable recent cases involved Google, which suspended its AI's generation of images of people after it was discovered that, when asked for an image of the Pope, the tool produced results that included a Black man and an Indian woman. For the technology giant, the launch of Gemini has raised potential legal and social problems due to the improper use of this technology.

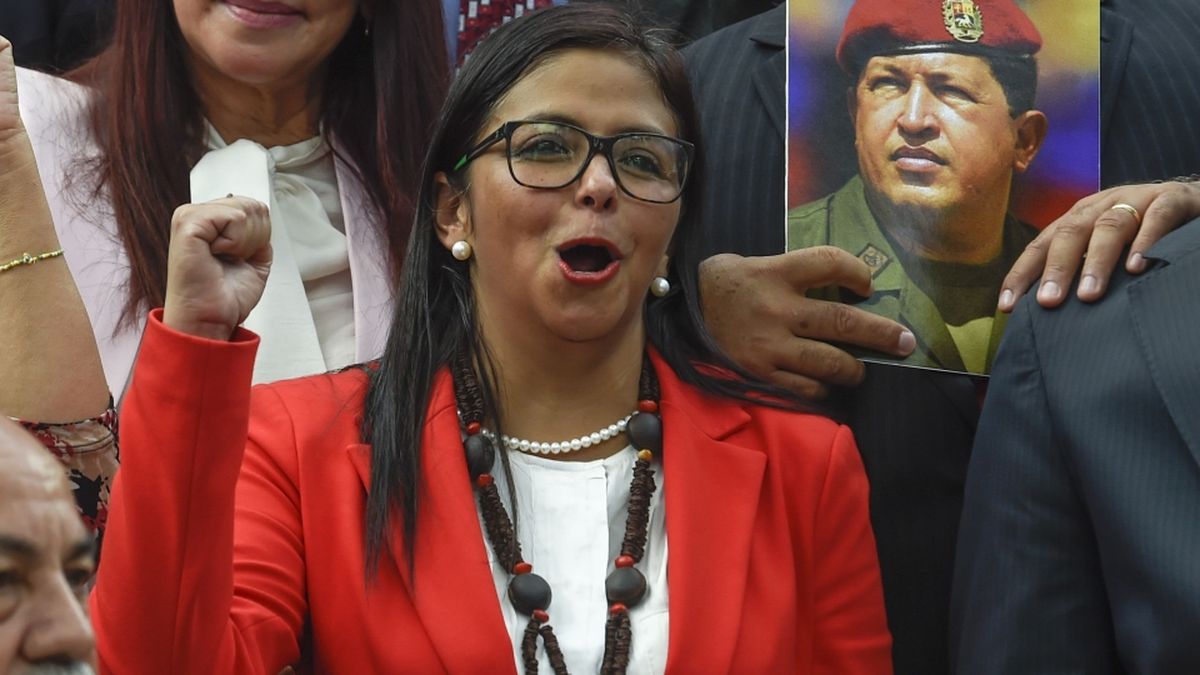

Another example arises when AI models generate inaccurate quotes and statements about LGBTQI+ issues. For instance, because its training data lacks diversity and accuracy, a model might wrongly claim that a public figure known for supporting LGBTQI+ rights has made negative statements about the community. In other cases, it might spread false quotes about sexual orientation based on stereotypes found in the data.

These hallucinations are currently receiving special attention because it is very easy to create false content for political and malicious purposes, such as fake news about events that never happened or photos altered to distort history. There is growing concern that the Internet of the future may be saturated with false information.

It is therefore essential for technology companies to promote equality in their products by striking a balance between accuracy and inclusiveness. Organizations must take care, when building AI models, to account for both goals without introducing inaccuracies or biases. Ensuring inclusive technology requires concrete actions, such as drawing on diverse sources when training LLMs, to guarantee fair representation of all identities.

A lack of diverse representation in training data could perpetuate current inequalities and fail to reflect the true makeup of society. The fight for algorithmic justice is, at its core, an extension of the fight for human rights. We need to make sure that the technology we build is equitable, so that it serves everyone and excludes no one.

Another crucial measure is training developers in these practices. Various tools and resources are available to help companies and their professionals detect biases in their language models.

It is important to reaffirm our commitment to diversity, equity, and inclusion, not only in our physical spaces but also in the digital world. Together, we can build technology that not only reflects our diversity but also celebrates and empowers it. Companies and professionals in the technology sector must join this effort for a more inclusive AI. By moving in this direction, we build a fairer and more equitable digital world for all. Inclusive technology is essential to reflect and respect human diversity in all its forms.

Global Marketing and Communication Director at NEORIS. Member of the Forbes Council, the Spanish Marketing Association and REDI LGTBIQ+

Source: Ambito

David William is a talented author who has made a name for himself in the world of writing. He is a professional author who writes on a wide range of topics, from general interest to opinion news. David is currently working as a writer at 24 hours worlds where he brings his unique perspective and in-depth research to his articles, making them both informative and engaging.