The phenomenon of deepfakes (content falsified with artificial intelligence) has burst brutally into the heart of our societies, altering public perception and raising serious ethical and moral challenges. While there is much talk about the benefits of AI, with thousands of positive applications, its misuse is increasingly worrying, especially when it affects minors and feeds the phenomenon known as post-truth.

The term deepfake comes from the combination of "deep learning" and "fake", and refers to images, videos or audio generated by sophisticated algorithms that imitate real people with surprising accuracy. Its impact ranges from the creation of fake sexualized content to the dissemination of fabricated news and statements, which seriously distorts the collective perception of reality.

Recently, multiple cases have come to light in which adolescents used these technological tools to create fake sexual images of classmates, causing deep emotional trauma and severe bullying.

The psychological damage caused by the circulation of this material among minors is significant and lasting. According to recent studies, victims of these deepfakes experience stress, anxiety and depression, which worsens problems of self-esteem and trust in others. Specialists in adolescent psychology recommend early educational intervention and awareness programs that involve both students and teachers.

But the impact of deepfakes is not limited to schools or to minors. Adults worldwide are also constantly exposed to manipulated content and find it increasingly difficult to tell real content from content made with AI. This feeds "post-truth", defined by the Oxford Dictionary as a situation in which objective facts have less influence on the formation of public opinion than personal emotions and beliefs. In this way, we become echo chambers for lies generated by others.

The massive circulation of fake news, reinforced by the visual credibility of a deepfake, can have disastrous consequences for society. From disinformation campaigns during elections to the stoking of social and political conflict, the ease with which false content can be generated and distributed demands immediate attention and clear regulation.

This phenomenon is not new. Hundreds of organizations around the world have been warning us about it for years: the problem was amplified by the arrival of social networks and is now exacerbated by generative AI. The overwhelming flood of false content, whose only purpose is to generate engagement, translates into growing apathy toward consuming information: if everything can be a lie, nothing is true.

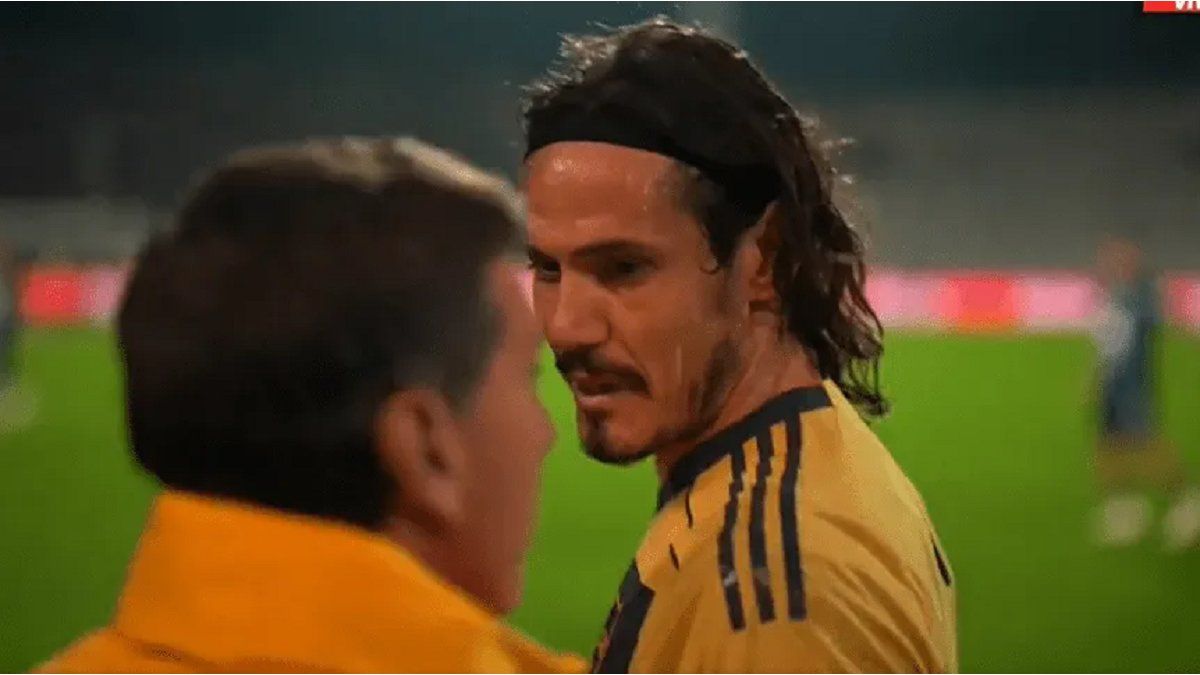

As if all this were not enough, the rise of sophisticated deepfake scams presents an even more complex scenario. Advanced audiovisual forgery techniques allow scammers to convincingly impersonate both authority figures, from company executives to political leaders, and close relatives of ordinary people.

Deepfakes exploit our cognitive biases: trust, time pressure and urgency, confirmation bias, and our tendency to believe realistic images and sounds. They target basic, deep-seated pillars of our primal psychology.

The responsibility to counter this phenomenon is shared. Digital platforms and social networks have a moral and technical obligation to detect and block false AI-generated content. At the same time, educational institutions must prioritize critical media literacy from an early age. And we, as active members of society, have a moral duty to strive to separate the wheat from the chaff.

Promoting digital literacy and awareness of artificial intelligence is undoubtedly the best preventive strategy, but reality also forces us to adopt short-term tactics to combat this phenomenon:

- When consuming news, validate it against at least two trusted sources. Before forwarding something to someone else, let us take a moment to verify not only that the story exists, but also how the source reporting it interprets it.

- Encourage minors to keep their social media profiles private, limiting malicious third parties' access to their images and videos.

- Agree on a system of safe words with close relatives so that we can immediately verify the identity of the person we are talking to.

The central challenge is to strike a balance between technological innovation and social ethics. Our individual ability to discern the truth is the last line of defense. It is vital to build public awareness so that people learn to question and verify what they see, hear and share online.

Secondary, University and CEO of Varegos

Source: Ambito

David William is a talented author who has made a name for himself in the world of writing. He is a professional author who writes on a wide range of topics, from general interest to opinion news. David is currently working as a writer at 24 hours worlds where he brings his unique perspective and in-depth research to his articles, making them both informative and engaging.