I have worked in the news industry for over six years, first as a reporter and now as an editor. I have covered politics extensively, and my work has appeared in major newspapers and online news outlets around the world. I also contribute regularly to 24 Hours World.

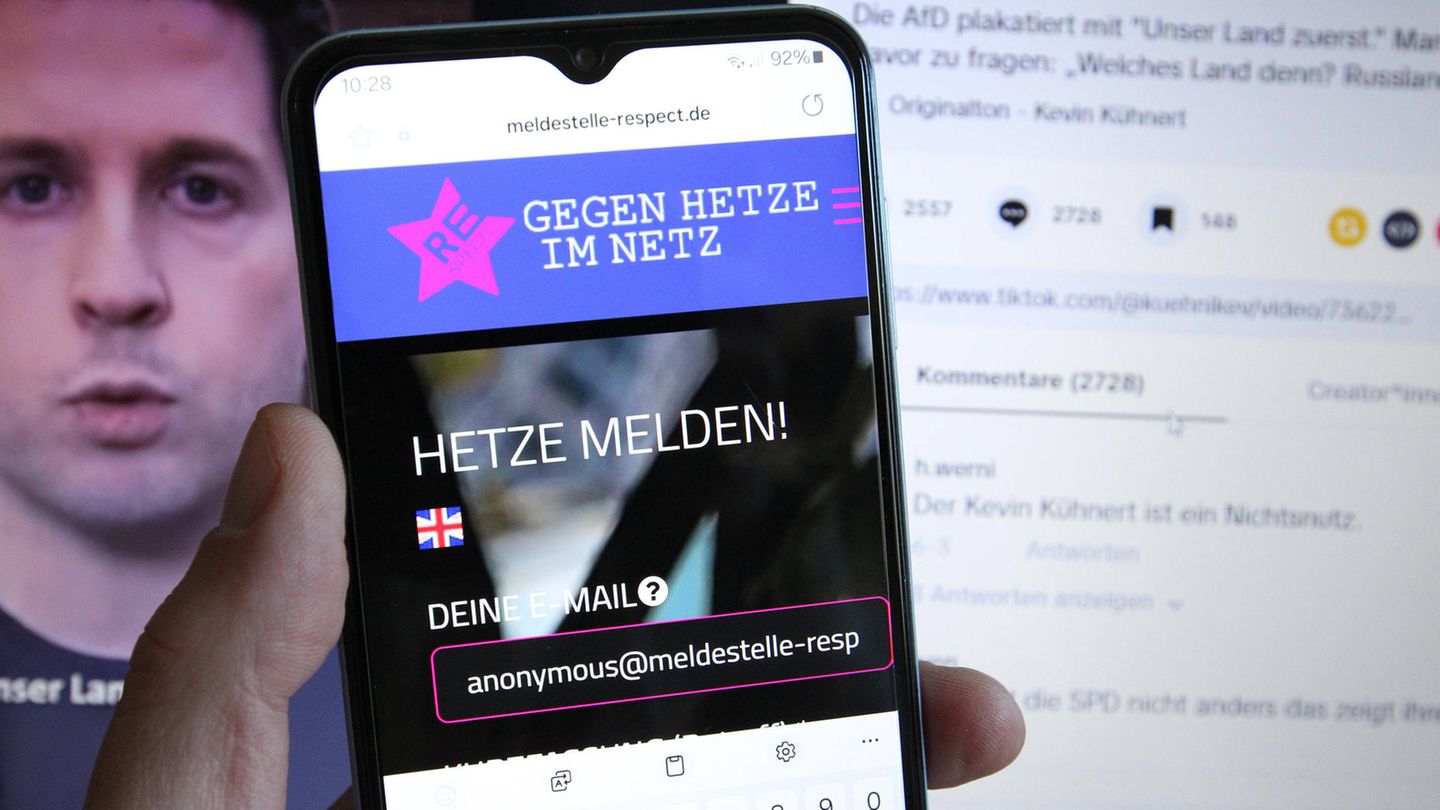

Digital reporting offices: What the debate about trusted flaggers is about

Most Read

Donald Trump announces meeting with Vladimir Putin in Budapest

October 16, 2025

Gaza peace deal: This is how Europe can regain its influence

October 16, 2025

Donald Trump receives Zelensky – but first calls Putin

October 16, 2025

Friedrich Merz and the cityscape: A sentence that will haunt him

October 16, 2025

Defense: Conciliatory tones in the Bundestag's first debate on military service

October 16, 2025

Latest Posts

The UN asked Israel to open more border crossings in Gaza to guarantee the entry of humanitarian aid

October 16, 2025

October 16, 2025 – 19:05 Meanwhile, Prime Minister Benjamin Netanyahu said that reopening the Rafah crossing next Sunday is being evaluated. The United

If they continue to murder, we will have no choice but to kill them

October 16, 2025

October 16, 2025 – 18:31 This Wednesday, the head of US Central Command, Brad Cooper, “strongly” urged Hamas to cease the “violence and shooting against innocent Palestinian civilians

The rare workout that is effective for people over 65: works forgotten muscles

October 16, 2025

October 16, 2025 – 18:30 A useful and little-known exercise for staying fit and strengthening parts of the body that are not so

24 Hours Worlds is a comprehensive source of world current affairs, offering up-to-the-minute coverage of breaking news and events from around the globe, with a team of experienced journalists and experts on hand 24/7.